SVM

All code for this project is stored in this repository

Background and Motivation

SVM is short for “Support Vector Machine”. It’s a powerful supervised classification technique with a lot of intuitive rationale behind it. In short, the basic idea is to search for the best hyperplane to separate two classes of data. Here is a good explanation, but in this post I’ll try to explain SVM in my own words as well.

Graphic Explanation

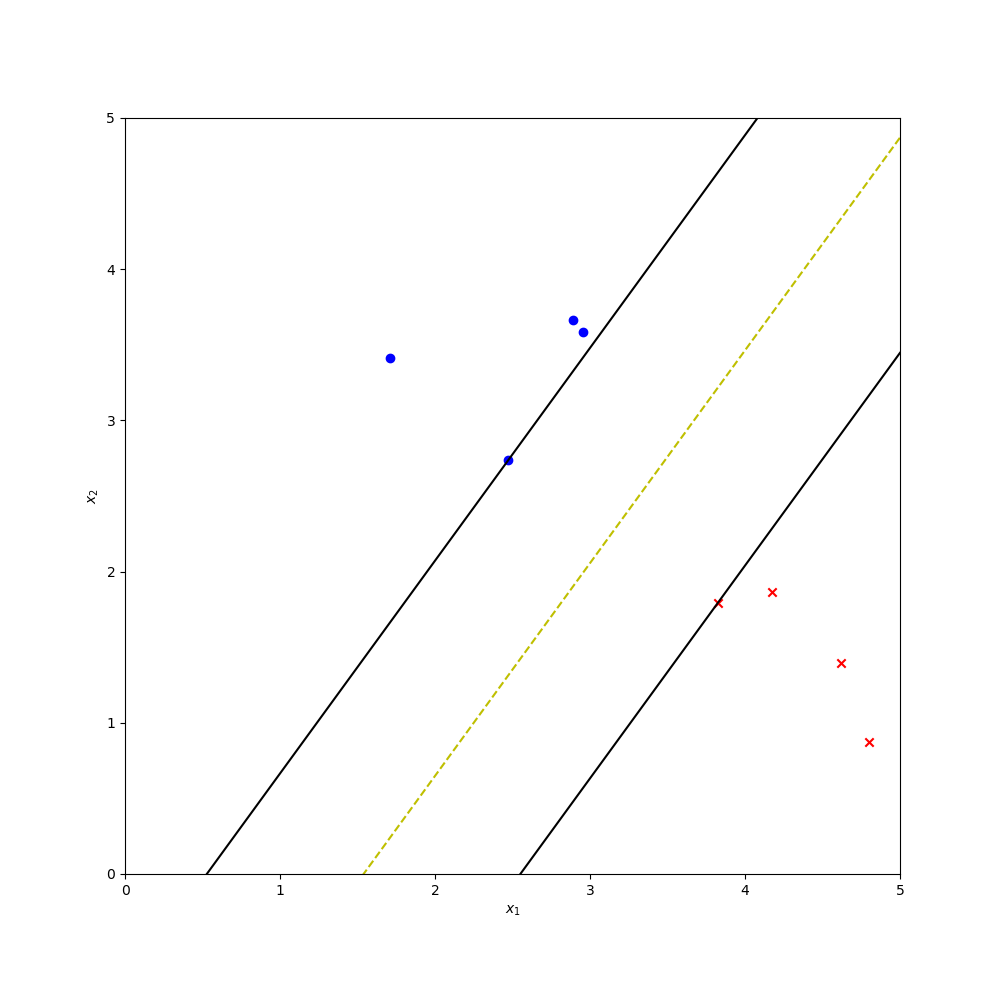

To get some graphical intuition, we’ll look at a 2D case of two clusters of points.

Red and blue points are clusters of separate classes (this is supervised learning, so the class of each point is known).

The yellow line separating the clusters is the SVM hyperplane (a line in 2D), which is essentially what we aim to find with this algorithm.

An “optimal” separating hyperplane is one that is equally far from the edges (the nearest points) of both clusters, with that distance as large as possible.
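To make the idea of “maximal distance from the edges” concrete, here is a minimal sketch in plain Python. The toy points, the two candidate lines, and the helper names `signed_distance` and `margin` are all made up for illustration; this is not the SVM training algorithm, only a way to score a given line by how far it sits from the nearest point.

```python
import math

def signed_distance(w, b, x):
    """Signed distance from the 2D point x to the line w·x + b = 0."""
    norm = math.hypot(w[0], w[1])
    return (w[0] * x[0] + w[1] * x[1] + b) / norm

def margin(w, b, red, blue):
    """Distance from the line to the nearest point of either cluster.

    Returns -inf when the line does not separate the two clusters.
    """
    red_d = [signed_distance(w, b, p) for p in red]
    blue_d = [signed_distance(w, b, p) for p in blue]
    # A separating line keeps each cluster strictly on its own side.
    separates = (
        (all(d < 0 for d in red_d) and all(d > 0 for d in blue_d))
        or (all(d > 0 for d in red_d) and all(d < 0 for d in blue_d))
    )
    if not separates:
        return float("-inf")
    return min(abs(d) for d in red_d + blue_d)

# Hypothetical toy clusters (not the data in the figures).
red = [(1.0, 1.0), (2.0, 1.0), (1.0, 2.0)]
blue = [(4.0, 4.0), (5.0, 4.0), (4.0, 5.0)]

# Two hypothetical candidate lines, each given as (w, b).
candidates = {
    "x + y = 5.5": ((1.0, 1.0), -5.5),
    "x = 3": ((1.0, 0.0), -3.0),
}

for name, (w, b) in candidates.items():
    print(name, round(margin(w, b, red, blue), 3))
```

Both lines separate the toy clusters, but the diagonal one keeps the nearest points farther away (about 1.768 versus 1.0), so it is the better candidate of the two in the sense described above.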

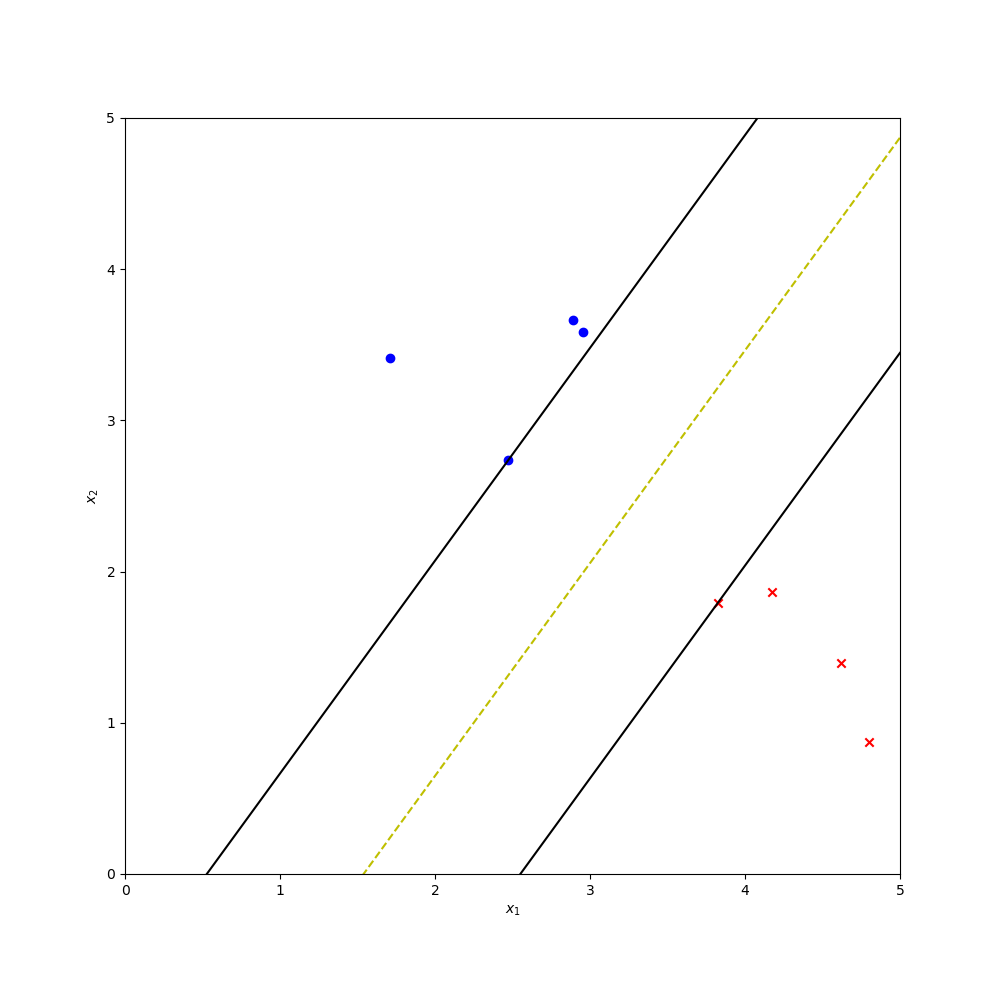

The definition of “optimal” above is a bit vague, so let’s try to understand it in our example. A closer look:

The vectors x+ and x- point to the edge points of each cluster (+ and - are chosen arbitrarily as class labels).

Denote the hyperplane parameters as:

w*x+b=0
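As a small illustration of what this equation says, the sketch below evaluates w*x+b for a few points. The values of `w` and `b` and the helper name `decision_value` are assumptions chosen for the example, not parameters from this post.

```python
def decision_value(w, b, x):
    """Evaluate w·x + b for a point x (works in any dimension)."""
    return sum(wi * xi for wi, xi in zip(w, x)) + b

# Hypothetical parameters: the line x1 + x2 - 5 = 0 in 2D.
w = (1.0, 1.0)
b = -5.0

on_plane = decision_value(w, b, (2.0, 3.0))  # a point lying on the line
positive = decision_value(w, b, (4.0, 4.0))  # the side w points toward
negative = decision_value(w, b, (1.0, 1.0))  # the opposite side

print(on_plane, positive, negative)
```

Points on the hyperplane give exactly zero, and the sign of the value tells which side of the hyperplane a point falls on.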